Building a Culture Centered on User Feedback at Google Meet


Chapter 1: The Importance of User Feedback

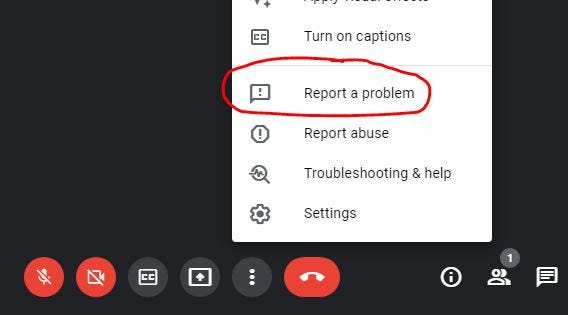

Did you know that you can provide feedback directly within any Google product? For instance, Google Meet offers a “Report a problem” feature that allows users to submit screenshots and descriptions to the engineering team. Curious about how this feedback is processed? Let me explain.

Creating Google Meet was a significant achievement, but developing the feedback system—dubbed Doctor Thor—was one of my most rewarding technical contributions. The project, co-created with a teammate known as Jay, allowed us to diagnose issues effectively. While I contributed the ideas and management, Jay implemented the technical aspects, which played a crucial role in his promotion to L6 Staff Software Engineer.

Note: This article is quite detailed and technical. If that piques your interest, keep reading!

A Note to My Colleagues: For those on Blind who suggested that I was focused on flashy projects rather than user needs, I encourage you to read on.

Chapter 2: Origins of the Feedback System

In mid-2016, we prepared Google Meet for an Open Beta phase, enabling select Google Cloud clients under NDA to start using it. I was eager to uncover any issues they faced, so I immersed myself in user feedback.

We had been integrating with the Google Feedback system since the days of Hangouts Video Calling in 2010. As a frontend engineer, I typically relied on backend teams to troubleshoot video calling problems. Engineers took turns analyzing feedback reports, but it was generally seen as a tedious task.

While reviewing the feedback reports, I discovered that we categorized internal Google feedback differently from external customer feedback. Internally, we generated bug reports that were triaged weekly. However, external feedback was largely ignored, as we outsourced it to contractors for statistical analysis. Unfortunately, this meant that engineers rarely delved into external feedback from Google Hangouts, which often contained spammy and low-quality submissions.

Recognizing that Google Meet, being a new enterprise product, had more valuable feedback, I decided to enable bug creation for all users and personally review every report. With around 100 feedback reports daily during the Open Beta, Jay and I managed to process them in our spare time. However, projections indicated that we could see over 3,000 reports daily at full launch. A more efficient solution was necessary.

Chapter 3: Enhancing Feedback Processing

When a user submits feedback, various data points, such as the operating system, browser, and application-specific details, are uploaded alongside the user's message and screenshot. The Google Feedback team provided essential infrastructure for Meet, Gmail, YouTube, and more.

For Google Meet web clients, the additional Product Specific Data (PSD) included camera and microphone configurations, user settings, JavaScript errors, and real-time statistics.

Initially, I manually translated impression IDs to understand what transpired during video calls, which was labor-intensive. After examining the code, we discovered that the original developer had stored impressions in a circular queue that was too small, so older events were overwritten before a report was filed. We increased the queue size so that feedback reports contained a coherent sequence of events.
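To make the queue-size problem concrete, here is a minimal sketch of a fixed-capacity circular queue. It is not the Meet implementation; the class name `CircularQueue` and its methods are assumptions chosen for illustration. It shows why a small capacity silently drops the oldest events.

```typescript
// Illustrative only: a fixed-capacity circular queue that overwrites
// its oldest entry once full. Names here are hypothetical.
class CircularQueue<T> {
  private readonly items: (T | undefined)[];
  private readonly capacity: number;
  private start = 0; // index of the oldest retained element
  private count = 0; // number of retained elements

  constructor(capacity: number) {
    if (!Number.isInteger(capacity) || capacity <= 0) {
      throw new Error("capacity must be a positive integer");
    }
    this.capacity = capacity;
    this.items = new Array<T | undefined>(capacity).fill(undefined);
  }

  push(item: T): void {
    const end = (this.start + this.count) % this.capacity;
    this.items[end] = item;
    if (this.count < this.capacity) {
      this.count++;
    } else {
      // The queue was full, so the oldest element was just overwritten.
      this.start = (this.start + 1) % this.capacity;
    }
  }

  // Returns the retained items, oldest first.
  toArray(): T[] {
    const out: T[] = [];
    for (let i = 0; i < this.count; i++) {
      out.push(this.items[(this.start + i) % this.capacity] as T);
    }
    return out;
  }
}

// Usage: with capacity 3, only the last three of five events survive.
const recentEvents = new CircularQueue<number>(3);
for (const eventId of [1, 2, 3, 4, 5]) {
  recentEvents.push(eventId);
}
const retained = recentEvents.toArray(); // [3, 4, 5]
```

A larger capacity trades a little memory for a longer event history in each report.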

Jay and I also recognized that while WebRTC provided numerous statistics, client-side processing was minimal. Previously, we only collected data every 10 seconds, making real-time debugging nearly impossible.

Discovering New Tools: With nearly a decade at Google, I had become adept at navigating internal tools. Engineers could search based on PSDs or impression data, deobfuscate JavaScript errors, and chart impressions to identify trends.

Recognizing the steep learning curve for newer engineers, we aimed to streamline the process.

Chapter 4: Developing Doctor Thor

Doctor Thor began as a simple Chrome Extension developed by Jay. It parsed and manipulated the DOM on internal feedback pages, with contributions from other Meet Web team engineers. This tool allowed us to convert impression IDs into readable formats, color-code them based on severity, and link to relevant code searches and charts.
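The ID-to-label translation and severity coloring can be sketched as a simple lookup. This is only an illustration: the impression IDs, names, and colors below are invented, and the real extension worked on the DOM of internal pages, which is omitted here.

```typescript
type Severity = "info" | "warning" | "error";

interface ImpressionInfo {
  name: string;
  severity: Severity;
}

// Hypothetical lookup table: these IDs and names are made up for illustration.
const IMPRESSIONS = new Map<number, ImpressionInfo>([
  [1001, { name: "CALL_JOINED", severity: "info" }],
  [1502, { name: "CAMERA_MUTED_BY_SYSTEM", severity: "warning" }],
  [2001, { name: "MICROPHONE_CAPTURE_FAILED", severity: "error" }],
]);

const SEVERITY_COLORS: Record<Severity, string> = {
  info: "gray",
  warning: "orange",
  error: "red",
};

// Turns a raw impression ID into a readable label plus a display color.
// Unknown IDs are kept visible rather than dropped.
function describeImpression(id: number): { label: string; color: string } {
  const info = IMPRESSIONS.get(id);
  if (info === undefined) {
    return { label: `UNKNOWN_${id}`, color: SEVERITY_COLORS.info };
  }
  return { label: info.name, color: SEVERITY_COLORS[info.severity] };
}

// Usage: annotate a sequence of raw IDs from a report.
const annotated = [1001, 2001, 9999].map(describeImpression);
```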

For PSDs containing complex JSON data, we formatted them for easier reading, which significantly enhanced our ability to identify anomalies. We also integrated deep links to various internal tools, aiding in debugging and helping new engineers familiarize themselves with the resources available.
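As a rough sketch of the JSON formatting step, a helper along these lines would re-indent a PSD value and fall back to the raw text when it is not valid JSON. The function name `formatPsd` and the sample payload are assumptions, not part of the original tool.

```typescript
// Pretty-prints a PSD value if it parses as JSON; otherwise returns it unchanged.
// `formatPsd` is a hypothetical helper name used for illustration.
function formatPsd(raw: string): string {
  try {
    return JSON.stringify(JSON.parse(raw), null, 2);
  } catch {
    // Not JSON (or truncated): show the original text rather than hiding it.
    return raw;
  }
}

// Usage with an invented payload.
const formattedPsd = formatPsd('{"camera":{"width":1280,"height":720},"muted":false}');
```

Indented output makes it easier to spot an unexpected value, such as a resolution or device setting that differs from the norm.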

A teammate even remarked that Doctor Thor made him excited to be on-call, as it empowered engineers with advanced debugging capabilities. Notably, engineers who installed the extension received special badges on their internal teams page, encouraging widespread adoption.

Chapter 5: The Introduction of Monitors

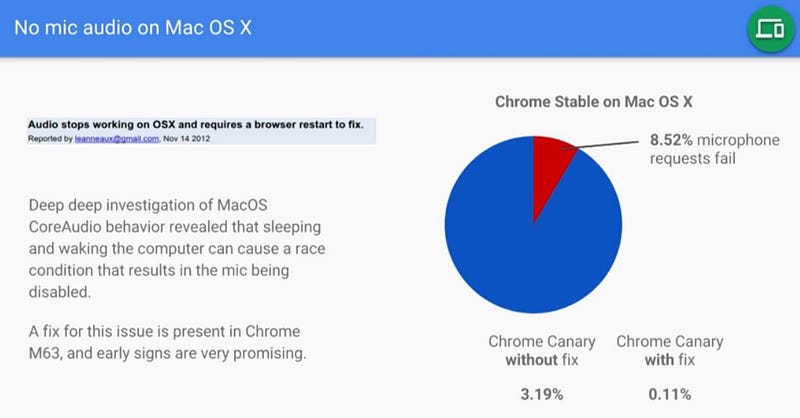

In early 2017, a PM Director shared a blog post from the video calling platform appear.in, discussing a known microphone issue on Macs. We could see feedback from Mac users reporting microphone failures, but identifying the specific cause was challenging.

To tackle this, we developed monitors—JavaScript classes that analyzed real-time WebRTC statistics and generated alerts when abnormal levels were detected. This innovation marked a significant improvement over the previous 10-second data collection method.

When users submitted feedback, each monitor could upload its data as PSD, allowing the Doctor Thor extension to visualize time series data effectively. We monitored various parameters, such as battery levels, CPU usage, and audio levels, providing unprecedented visibility into user experiences.
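A monitor of this kind might look roughly like the sketch below. It is an assumption-heavy illustration: the class name `AudioLevelMonitor`, the thresholds, and the report format are all invented. In a browser the samples could come from `RTCPeerConnection.getStats()`; here they are passed in directly so the example stays self-contained.

```typescript
interface Sample {
  timestampMs: number;
  value: number; // e.g. an audio level in the range 0..1
}

interface Alert {
  monitor: string;
  timestampMs: number;
  message: string;
}

// Hypothetical monitor: raises an alert when the audio level stays below a
// threshold for several consecutive samples, and keeps the full time series.
class AudioLevelMonitor {
  readonly name = "audioLevel";
  private readonly samples: Sample[] = [];
  private readonly alerts: Alert[] = [];
  private silentRun = 0;
  private readonly silenceThreshold: number;
  private readonly maxSilentSamples: number;

  constructor(silenceThreshold: number = 0.01, maxSilentSamples: number = 3) {
    this.silenceThreshold = silenceThreshold;
    this.maxSilentSamples = maxSilentSamples;
  }

  record(sample: Sample): void {
    this.samples.push(sample);
    if (sample.value < this.silenceThreshold) {
      this.silentRun++;
    } else {
      this.silentRun = 0;
    }
    // Alert once per silent stretch, at the moment the run reaches the limit.
    if (this.silentRun === this.maxSilentSamples) {
      this.alerts.push({
        monitor: this.name,
        timestampMs: sample.timestampMs,
        message: `audio level below ${this.silenceThreshold} for ${this.maxSilentSamples} samples`,
      });
    }
  }

  getAlerts(): readonly Alert[] {
    return this.alerts;
  }

  // Serializes the time series and alerts, e.g. to attach to a feedback report.
  toReportData(): string {
    return JSON.stringify({ name: this.name, samples: this.samples, alerts: this.alerts });
  }
}

// Usage: one sample per second, with two silent stretches.
const micMonitor = new AudioLevelMonitor(0.01, 3);
[0.5, 0, 0, 0, 0, 0.4, 0, 0, 0].forEach((value, i) => {
  micMonitor.record({ timestampMs: i * 1000, value });
});
```

Keeping the raw samples alongside the alerts is what would let a viewer plot the time series later, rather than seeing only that an alert fired.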

Chapter 6: Optimizing Feedback Processing

Jay and I discovered that the Google Feedback tool allowed us to tag reports with labels, making it easier to organize and triage them. We created over 100 labeling rules that identified patterns in user requests and enabled contractors to mark similar feedback as duplicates of master bug reports.

This transformation turned a chaotic collection of feedback into a structured list of user-requested features and quality issues.
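The article does not describe how the labeling rules were written, so the following is only a sketch of one plausible shape: each rule is a label plus a set of patterns that must all match the report text. The rule names and patterns are invented for illustration.

```typescript
interface LabelRule {
  label: string;
  // Every pattern must match for the label to apply.
  patterns: RegExp[];
}

// Hypothetical rules: the real rule set is not public.
const LABEL_RULES: LabelRule[] = [
  { label: "mac-microphone", patterns: [/\b(mic|microphone)\b/i, /\b(mac|macbook)\b/i] },
  { label: "echo", patterns: [/\becho\b/i] },
  { label: "in-meeting-chat", patterns: [/\bchat\b/i] },
];

// Returns every label whose patterns all match the feedback text.
function labelsFor(text: string): string[] {
  return LABEL_RULES
    .filter((rule) => rule.patterns.every((pattern) => pattern.test(text)))
    .map((rule) => rule.label);
}

// Usage: reports with the same label can be grouped under one master bug.
const micLabels = labelsFor("My microphone stopped working on my Mac after lunch");
const chatLabels = labelsFor("Please add chat to meetings");
```

The patterns deliberately omit the `g` flag, since a global `RegExp` keeps state between `test()` calls and would give inconsistent results when reused across reports.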

Addressing the Mac Microphone Issue: With our new tools, we confidently identified and addressed the long-standing microphone problem affecting Mac users. By implementing a dialog that guided users on how to restart Chrome, we saw a decrease in reports related to this issue.

Despite our temporary fixes, the root cause remained until a member of the WebRTC team investigated further, leading to a bug report and eventual resolution from Apple.


Chapter 7: User-Centric Improvements

Resolving the Mac audio issue bolstered our credibility among engineering and product management teams. Weekly meetings were established to review user feedback trends, helping us prioritize improvements.

We made significant strides, raising the join success rate from 97% to 99.4% (cutting failed joins from 3% to 0.6%, a fivefold reduction) and reducing echo during calls by automatically pairing audio devices.

When discussing our successes with the Eng VP overseeing multiple teams, he expressed curiosity about why other teams were not implementing similar user-focused processes. Despite our outreach, only one team engaged with us.

As leadership changed, I continued to advocate for our feedback system, presenting sorted lists of user-requested features. Unfortunately, this effort often went unrecognized, as addressing bugs was not a priority.

Top User Requests: Between 2018 and 2019, user requests included free consumer meetings, in-meeting chat, audio/video merging, moderation tools, and more. Notably, consumer meetings were the top requested feature.

It wasn't until the COVID-19 pandemic that Google Meet gained the attention it needed, leading to the launch of several highly requested features.

Chapter 8: Adapting to the Surge in Usage

During the COVID-19 pandemic, Meet's usage skyrocketed, with daily feedback reports increasing to over 40,000. Fortunately, we had already anticipated such a surge and developed a solution within Doctor Thor.

Utilizing two years of historical feedback as training data, Jay implemented an AI-powered triaging system. This allowed us to categorize feedback effectively, distinguishing specific audio, video, and screensharing issues.

While the AI couldn't handle every report, it effectively filtered out spam and known issues, allowing us to focus on more complex tasks. Unfortunately, after Jay's departure from my team, we faced challenges in sustaining resources for quality improvements.

Chapter 9: A Final Note on Teamwork

Before my retirement, I took the opportunity to commend Jay for his remarkable contributions, which greatly enhanced our understanding of user feedback.

Despite the challenges we faced, working with Jay on Doctor Thor marked a golden age for the Meet Web team. Our commitment to understanding user frustrations and improving their experiences fostered a strong team culture.

Reflecting on my experiences, I realize that building a user-centric culture requires unwavering leadership support.

During a stay at a remote hotel, I was reminded of the essence of service: ensuring that users can effectively connect and communicate. Listening to users is crucial for any product's success.
