Unraveling the Enigma of AI's Black Box Mystery

Chapter 1: Understanding the Black Box Problem

The black box problem is one of the central puzzles in artificial intelligence. We can observe a system's inputs and outputs, yet the internal mechanisms behind its decisions remain elusive. For instance, when an AI is tasked with diagnosing patients, what leads it to determine that one individual is ill while another is healthy? Are these assessments based on criteria a medical professional would endorse? Demystifying the black box is especially important in healthcare, where lives are at stake. Understanding these decision-making processes also matters in legal settings, in autonomous vehicles, and in refining machine learning models themselves.

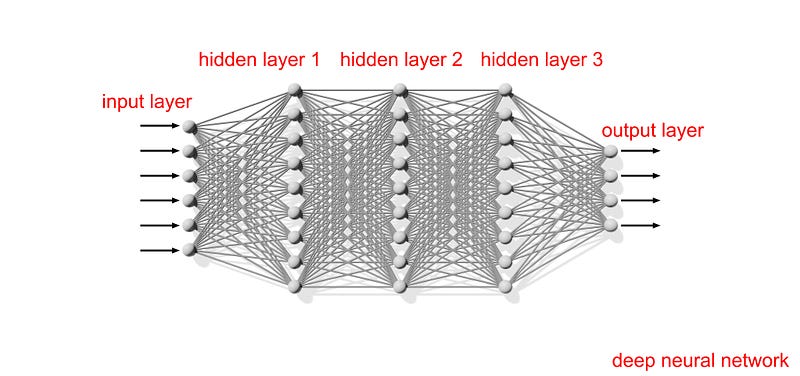

Understanding how decisions are made is as important as the decisions themselves. Researchers are making strides in uncovering the secrets of the black box, an effort akin to mapping the intricacies of the human mind. After all, neural networks draw loose inspiration from the brain's structure. When our brain recognizes an object like a horse saddle, specific nerve cells activate in response to that stimulus, and different visual stimuli trigger distinct patterns of neuronal activity. Similarly, a deep neural network responds differently depending on the numerical inputs it receives. Unlike biological neurons, however, the neurons in a deep neural network are mathematical functions. Each one multiplies its inputs by learned weights, adds the results together with a bias term, and passes the sum through an activation function; in the simplest case, the neuron fires only if the sum exceeds a threshold. Its output is then relayed to the next layer of neurons for further processing. Networks learn through a method called backpropagation: the error measured at the output is propagated backward through the hidden layers, and each weight is adjusted in proportion to its contribution to that error.
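
To make the description of a neuron and of backpropagation concrete, here is a minimal sketch of a single artificial neuron trained by gradient descent. The sigmoid activation, the squared-error loss, the learning rate, and every name in the code are illustrative assumptions rather than details taken from any particular system.

```python
import math

def sigmoid(z):
    """Smooth activation that squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def train_step(inputs, target, weights, bias, lr=0.5):
    """One gradient-descent update for the loss 0.5 * (out - target) ** 2."""
    out = neuron(inputs, weights, bias)
    # Chain rule: dLoss/dz = (out - target) * sigmoid'(z),
    # where sigmoid'(z) = out * (1 - out).
    delta = (out - target) * out * (1.0 - out)
    # Each weight moves in proportion to its own input's share of the error.
    new_weights = [w - lr * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias - lr * delta
    return new_weights, new_bias

# Hypothetical training example: two input values and a desired output of 1.0.
inputs, target = [1.0, 0.5], 1.0
weights, bias = [0.1, -0.2], 0.0

before = neuron(inputs, weights, bias)
for _ in range(100):
    weights, bias = train_step(inputs, target, weights, bias)
after = neuron(inputs, weights, bias)

print(f"output before training: {before:.3f}, after: {after:.3f}")
```

In a deep network the same chain-rule step is repeated layer by layer, from the output back toward the input, which is where the name backpropagation comes from.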

Section 1.1: The Complexity of Machine Learning Systems

Not all machine learning models are opaque. Their complexity varies with the tasks they perform and the layers of calculation they apply to their inputs. It is the deep neural networks, which process vast quantities of numbers running into the millions or billions, that most often become shrouded in mystery.

To begin demystifying this complexity, we must first evaluate the inputs and their influence on the outputs. Saliency maps are valuable tools for this analysis. They show which parts of an input, such as the regions of an image, most strongly influence the AI's output. They are often rendered with color coding that represents how much each image segment contributed to the final conclusion. AI often considers not just the primary object but also its surrounding context, much as a reader uses contextual hints to work out the meaning of an unfamiliar word.
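
One simple way to build a saliency map is to nudge each pixel slightly and record how much the model's score moves. The sketch below does this with finite differences on a 3x3 grid of brightness values. The `toy_model` function is a hypothetical stand-in for a real classifier, and its weights are invented for illustration; real tools usually compute the same sensitivities with automatic differentiation instead.

```python
def toy_model(image):
    """Hypothetical classifier score that responds mostly to the centre pixel."""
    weights = [[0.0, 0.1, 0.0],
               [0.1, 1.0, 0.1],
               [0.0, 0.1, 0.0]]
    return sum(w * p
               for w_row, p_row in zip(weights, image)
               for w, p in zip(w_row, p_row))

def saliency_map(model, image, eps=1e-3):
    """Approximate |d score / d pixel| for every pixel by finite differences."""
    base = model(image)
    saliency = []
    for r, row in enumerate(image):
        saliency_row = []
        for c in range(len(row)):
            perturbed = [list(p_row) for p_row in image]  # copy the image
            perturbed[r][c] += eps                        # nudge one pixel
            saliency_row.append(abs(model(perturbed) - base) / eps)
        saliency.append(saliency_row)
    return saliency

image = [[0.2, 0.5, 0.2],
         [0.5, 0.9, 0.5],
         [0.2, 0.5, 0.2]]
sal = saliency_map(toy_model, image)

for row in sal:
    print(" ".join(f"{value:.2f}" for value in row))
```

The largest value appears at the centre, which is the pixel the toy model weights most heavily; the corners score zero because the model ignores them.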

Covering portions of an image can lead the AI to alter its conclusions. If hiding a region changes the output, that region evidently mattered to the decision. This technique helps researchers understand the rationale behind the AI's decisions and judge whether those decisions are justified. For example, early iterations of autonomous vehicle AI sometimes opted to make left turns based on weather conditions, having been trained on data in which the sky was consistently a particular shade of lilac. The AI mistakenly treated that shade as a critical cue for turning left.
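
The covering technique can be sketched in a few lines. The example below masks one row of a tiny image at a time and measures how far the score moves. The `turn_left_score` function is a deliberately flawed, hypothetical model that looks only at the sky row, loosely mirroring the anecdote above; it is not a reconstruction of any real driving system.

```python
def turn_left_score(image):
    """Hypothetical flawed model: the score depends only on the top (sky) row."""
    sky = image[0]
    return sum(sky) / len(sky)

def occlusion_sensitivity(model, image, fill=0.0):
    """Score change when each row of the image is masked out in turn."""
    base = model(image)
    changes = []
    for r in range(len(image)):
        masked = [list(row) for row in image]   # copy the image
        masked[r] = [fill] * len(masked[r])     # cover one row
        changes.append(abs(base - model(masked)))
    return changes

image = [[0.8, 0.8, 0.8, 0.8],   # sky
         [0.3, 0.4, 0.3, 0.4],   # horizon
         [0.1, 0.9, 0.9, 0.1]]   # road with lane markings

changes = occlusion_sensitivity(turn_left_score, image)
for name, change in zip(["sky", "horizon", "road"], changes):
    print(f"{name}: score change {change:.2f}")
```

Only covering the sky changes the score, which exposes that this model is steering by the sky rather than by the road.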

Subsection 1.1.1: The Role of Generative Adversarial Networks

What if we could employ AI to comprehend AI? Generative adversarial networks (GANs) consist of two networks, each with a distinct role. One network, the generator, produces data, often in the form of images, while the other, the discriminator, assesses whether the data is authentic. Essentially, the second network tries to determine whether an image, such as a strawberry, is a real photograph or was created by the first network. Through this interplay, the first network learns to produce increasingly realistic images that deceive the second more and more often. Researchers can then observe which aspects of an image are modified to enhance its believability, thus gaining insights into the AI's focus areas.
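
The tug-of-war between the two networks can be illustrated with a heavily simplified sketch. Here the "images" are single numbers, the generator is a straight line, the discriminator is a single sigmoid unit, and the gradients are derived by hand. All names, starting values, and the learning rate are assumptions made for illustration; real GANs use deep networks and automatic differentiation.

```python
import math

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def generate(z, g):
    """Generator: maps noise z to a fake sample along the line a * z + b."""
    a, b = g
    return a * z + b

def discriminate(x, d):
    """Discriminator: estimated probability that x is a real sample."""
    w, c = d
    return sigmoid(w * x + c)

def d_loss(real, fake, d):
    """Cross-entropy with real samples labelled 1 and fakes labelled 0."""
    loss = -sum(math.log(discriminate(x, d)) for x in real)
    loss -= sum(math.log(1.0 - discriminate(x, d)) for x in fake)
    return loss / (len(real) + len(fake))

def g_loss(noise, g, d):
    """The generator does well when the discriminator calls its fakes real."""
    return -sum(math.log(discriminate(generate(z, g), d)) for z in noise) / len(noise)

def d_step(real, fake, d, lr=0.1):
    """One gradient-descent step on the discriminator's parameters."""
    w, c = d
    n = len(real) + len(fake)
    # For sigmoid plus cross-entropy, dLoss/dlogit = prediction - label.
    errs = [(discriminate(x, d) - 1.0, x) for x in real]
    errs += [(discriminate(x, d), x) for x in fake]
    grad_w = sum(e * x for e, x in errs) / n
    grad_c = sum(e for e, _ in errs) / n
    return (w - lr * grad_w, c - lr * grad_c)

def g_step(noise, g, d, lr=0.1):
    """One gradient-descent step on the generator's parameters."""
    a, b = g
    w, _ = d
    # d(-log D(x))/dx = -(1 - D(x)) * w, chained through x = a * z + b.
    grads = [-(1.0 - discriminate(generate(z, g), d)) * w for z in noise]
    grad_a = sum(gx * z for gx, z in zip(grads, noise)) / len(noise)
    grad_b = sum(grads) / len(noise)
    return (a - lr * grad_a, b - lr * grad_b)

real = [3.8, 4.0, 4.2]            # "authentic" data clustered near 4
noise = [-1.0, 0.0, 1.0]          # fixed noise so the sketch is deterministic
g, d = (1.0, 0.0), (0.5, -1.0)    # arbitrary starting parameters

fake = [generate(z, g) for z in noise]
d_new = d_step(real, fake, d)     # discriminator gets better at spotting fakes
g_new = g_step(noise, g, d_new)   # generator shifts its output toward the real data

print("discriminator loss:", d_loss(real, fake, d), "->", d_loss(real, fake, d_new))
print("generator loss:", g_loss(noise, g, d_new), "->", g_loss(noise, g_new, d_new))
```

Alternating these two steps many times is the basic training loop. In practice the balance between the two networks is delicate, and training often needs careful tuning to stay stable.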

However, the workings of these networks remain somewhat enigmatic, as the exact processes behind the first network's image generation are not fully understood. GANs can create outputs that appear genuinely human-like, whether it's a facial image or a piece of writing, raising both fascination and concern in the artistic domain where machines seem to convey a semblance of soul.

Section 1.2: Specialization in Neural Networks

Researchers believe that GANs owe part of their success to specialized neurons. Different neurons may focus on generating specific elements: some on buildings, others on flora, and still others on details like windows and doors. For AI systems, context clues serve as powerful aids. If a scene contains a window, it is statistically more likely to be classified as a room than as a cliff. The presence of these specialized neurons enables computer scientists to adjust the system for improved flexibility and efficacy. For example, if an AI has only ever seen flowers in vases, it can be adjusted to treat a clay pot as a vase, provided the programmer understands which parts of the model to modify.

While these explanations provide some clarity, they must be rigorously tested for accuracy. If certain image sections are believed to significantly influence the AI's conclusions, altering those segments should yield markedly different results. Thus, it’s essential not only to assess the system itself but also to validate our interpretations to ensure their correctness. Even if our explanations make logical sense, that does not guarantee their truth. AI operates like an enigmatic entity—human in form and behavior yet often governed by a logic that diverges from our own.
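
A basic version of this validation is a deletion test: mask the pixels an explanation ranks as most important, mask the ones it ranks as least important, and compare the effect on the output. The sketch below applies the test to two candidate explanations of a hypothetical toy model. The model, the pixel values, and both attribution lists are invented for illustration.

```python
def deletion_check(model, pixels, attribution, k, fill=0.0):
    """Score change from masking the k most vs. the k least attributed pixels."""
    order = sorted(range(len(pixels)), key=lambda i: attribution[i], reverse=True)

    def masked_change(indices):
        masked = list(pixels)
        for i in indices:
            masked[i] = fill
        return abs(model(pixels) - model(masked))

    return masked_change(order[:k]), masked_change(order[-k:])

def toy_model(pixels):
    """Hypothetical model that truly depends only on the first two pixels."""
    return 2.0 * pixels[0] + 1.0 * pixels[1]

pixels = [0.9, 0.8, 0.7, 0.6]
faithful = [2.0, 1.0, 0.0, 0.0]     # explanation that matches the model
misleading = [0.0, 0.0, 1.0, 2.0]   # plausible-looking but wrong explanation

top_f, bottom_f = deletion_check(toy_model, pixels, faithful, k=2)
top_m, bottom_m = deletion_check(toy_model, pixels, misleading, k=2)

print(f"faithful:   top-k change {top_f:.2f}, bottom-k change {bottom_f:.2f}")
print(f"misleading: top-k change {top_m:.2f}, bottom-k change {bottom_m:.2f}")
```

An explanation passes the check when masking its top-ranked pixels moves the score far more than masking its bottom-ranked ones. The misleading explanation fails because the pixels it highlights have no effect on the model at all.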

Chapter 2: The Challenges of Understanding AI

Grappling with the intricacies of neural networks is a demanding endeavor. Not every researcher considers it a worthwhile pursuit, particularly when dealing with more intricate networks that some deem too complex to unravel. This perspective suggests that we may need to place our trust in their decision-making processes without fully comprehending their inner workings.

Integrating AI into critical fields such as law and medicine poses its own set of challenges. Humans are inherently cautious beings, and if we struggle to place trust in one another, it becomes even more difficult to do so in neural networks with which we are just beginning to coexist. The quality of an AI's explanation may not significantly influence our trust.

Nevertheless, the interrelationship between humans and AI is rapidly evolving. Just as AI systems depend on us for learning and development, we too are gaining insights from them. For instance, biologists may leverage AI to forecast gene functions, discovering the significance of previously overlooked sequences as they analyze the system's predictive processes.

Whether the AI's black box can ever transform into a transparent glass box remains uncertain, yet we are undeniably on a trajectory of dependency. As neural networks grow in sophistication and capability, many researchers speculate that a comprehensive understanding of how these systems learn and innovate may remain elusive. Regardless of whether we obtain clear explanations, the interplay between human and machine continues to deepen, with both parties remaining enigmatic to each other.