Understanding Cache Memory Structures: A Comprehensive Guide


Chapter 1: Introduction to Cache Memory

Cache memory is essential for enhancing the speed and overall performance of contemporary computers. Having previously discussed the fundamentals of cache memory and its underlying principles of locality, we are now prepared to delve into the various structural designs of cache memory. Let’s get started!

Setting the Scene

Before we embark on this exploration, it’s crucial to establish some foundational rules to aid our understanding.

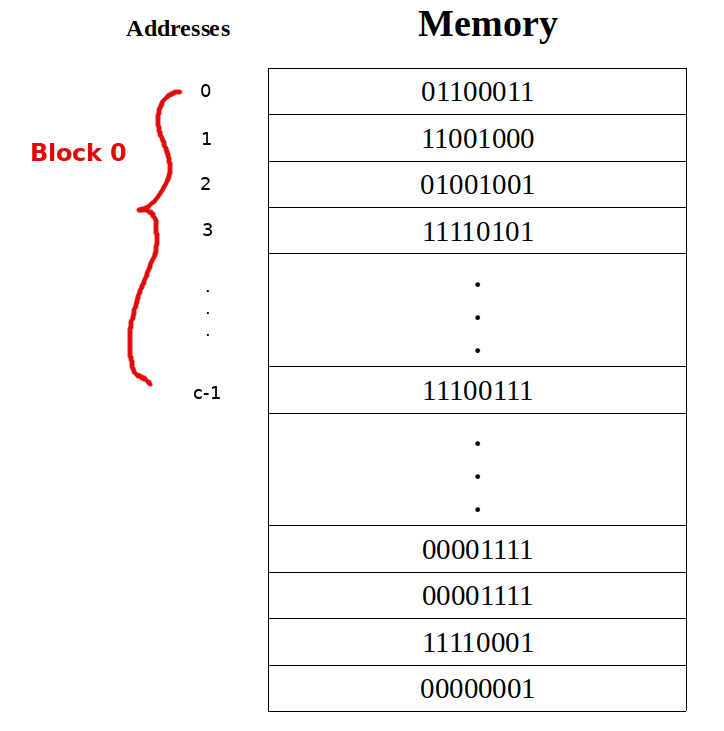

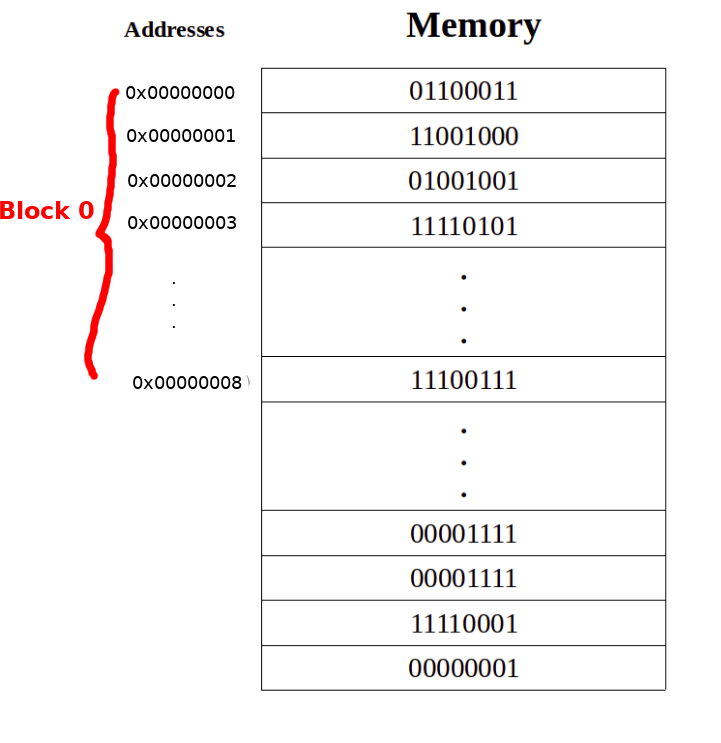

Firstly, we assume that our primary memory is byte-addressable, meaning each address refers to a single memory cell holding one byte (1 byte = 8 bits), the smallest unit of data we can access. Memory is organized into blocks, which are fixed-size groups of consecutive memory cells.

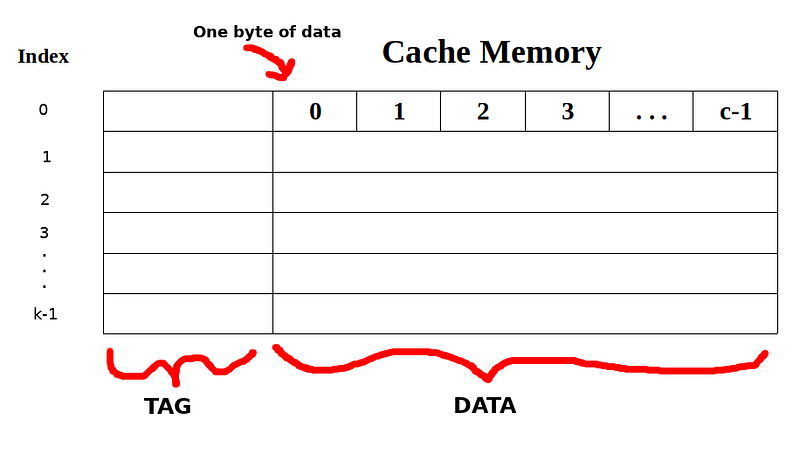

Similar to main memory, cache memory can be viewed as an array of cells known as "cache lines." Each cache line is indexed by a number and consists of two columns: one for the "Tag" and another for the "Data." As previously noted, data is transferred from main memory to cache in blocks. We will assume that the size of each cache line corresponds to the size of a memory block, allowing a complete block of data to be contained within a single cache line.

The tags in each line help the cache identify where the data originated in main memory, but the specifics depend on the type of cache employed. We will discuss tags further in the subsequent sections.

Now, let's examine the various types of cache memory structures.

Mapping Schemes

Since cache memory is significantly smaller than main memory, a question arises whenever we want to transfer a block from memory to cache: where should we place this block within the cache? The method of deciding where to store a memory block in cache is known as "mapping." Because main memory holds far more blocks than the cache has lines, multiple memory blocks must inevitably map to the same cache line. In all mapping schemes we will discuss, the memory address of the block plays a crucial role in determining its cache placement.

There are three primary mapping types for cache memories, each with its own advantages and disadvantages. Each mapping scheme determines how the cache is organized and how a memory address is divided into fields.

Direct Mapping

Direct mapping is a scheme where each memory block is assigned to exactly one specific cache line. To understand this further, let’s break it down step by step.

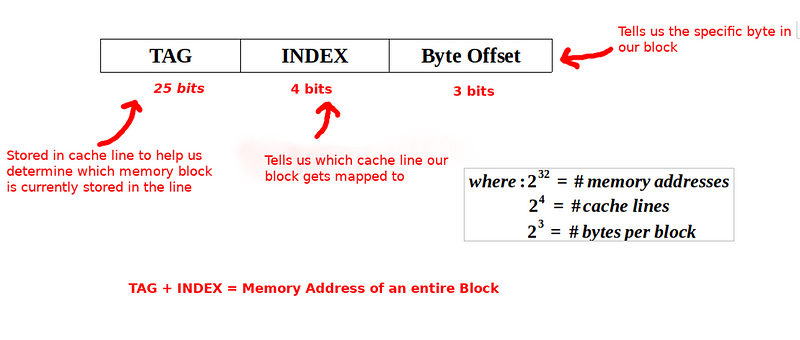

The width of a memory address (in bits) is determined by the size of the physical memory. For instance, a byte-addressable computer with 4 GB of RAM has 4 × 2^30 = 2^32 bytes, each needing a unique address, so its addresses are 32 bits wide.
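The relationship between memory size and address width is a base-2 logarithm. Here is a minimal Python sketch, assuming byte addressing and a power-of-two memory size; the function name `address_bits` is hypothetical:

```python
def address_bits(memory_size_bytes: int) -> int:
    """Number of bits needed to give every byte a unique address.

    Assumes byte-addressable memory; for a power-of-two size this is log2(size).
    """
    # (n - 1).bit_length() is the smallest b such that 2**b >= n
    return (memory_size_bytes - 1).bit_length()


GIB = 2**30
print(address_bits(4 * GIB))  # 32
```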

Suppose we have a 4 GB system with each block comprising 8 bytes. The cache consists of 16 lines.

Because each block holds 8 = 2^3 bytes, the lowest 3 bits of an address (the "byte offset") select a byte within its block, and the upper 29 bits identify the block to which that byte belongs (the "block address"). To map the address to a cache line, we use the 4 lowest-order bits of the block address (address bits 3 to 6, counting the least significant bit as bit 0), since 4 bits are exactly enough to select one of the 16 lines; this portion is called the "index." However, any addresses that share the same index bits map to the same line, so the cache needs a way to identify which block it currently holds there. It does this by storing the remaining 25 upper bits of the address (bits 7 to 31) alongside the data in the cache line; these bits are referred to as the "tag."
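The split described above can be checked with a few bit operations. This is a minimal Python sketch assuming the running example (32-bit addresses, 8-byte blocks, 16 lines) and power-of-two sizes; the constant and function names are hypothetical:

```python
ADDRESS_BITS = 32
BLOCK_SIZE = 8   # bytes per block
NUM_LINES = 16   # lines in the direct-mapped cache


def field_widths(address_bits: int, block_size: int, num_lines: int) -> tuple[int, int, int]:
    """Return (tag_bits, index_bits, offset_bits); sizes must be powers of two."""
    offset_bits = block_size.bit_length() - 1  # log2(block_size)
    index_bits = num_lines.bit_length() - 1    # log2(num_lines)
    tag_bits = address_bits - index_bits - offset_bits
    return tag_bits, index_bits, offset_bits


def split_address(address: int) -> tuple[int, int, int]:
    """Split an address into (tag, index, offset) for the example cache."""
    _, index_bits, offset_bits = field_widths(ADDRESS_BITS, BLOCK_SIZE, NUM_LINES)
    offset = address & (BLOCK_SIZE - 1)                 # lowest 3 bits
    index = (address >> offset_bits) & (NUM_LINES - 1)  # next 4 bits
    tag = address >> (offset_bits + index_bits)         # remaining upper 25 bits
    return tag, index, offset


print(field_widths(ADDRESS_BITS, BLOCK_SIZE, NUM_LINES))  # (25, 4, 3)
tag, index, offset = split_address(0x1234567D)
print(hex(tag), index, offset)  # 0x2468ac 15 5
```

Reassembling `(tag << 7) | (index << 3) | offset` gives back the original address, a quick sanity check that no bits were lost or counted twice.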

Mathematically, the operation performed by a direct-mapped cache to determine the mapping of blocks to lines is expressed as follows:

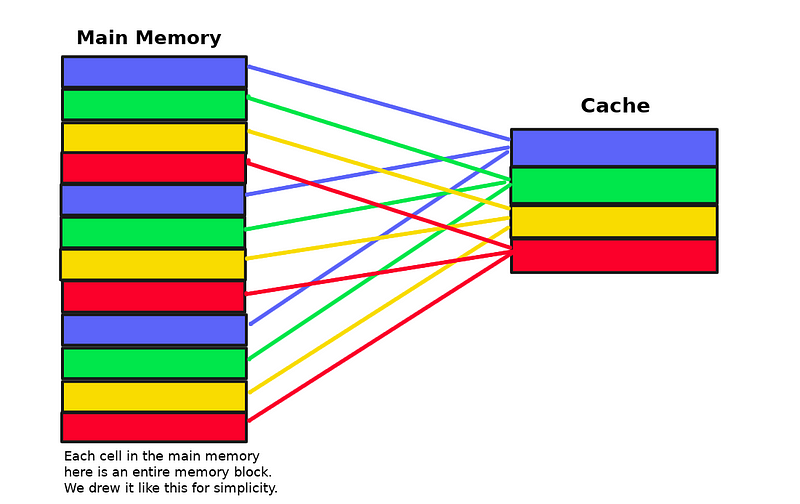

Cache line = (Block address) % (Number of cache lines), where Block address = ⌊Memory address / Block size⌋

The illustration above demonstrates the modulo operation in action. The first memory block maps to the first cache line, the second to the second line, and so on. Upon reaching the fifth memory block, the mapping cycles back to the first cache line.
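To see the modulo mapping, and the conflicts it causes, in action, here is a minimal Python sketch of a direct-mapped cache that tracks only tags (no data). The class name is hypothetical, and using `None` to mark an empty line is an assumption standing in for the valid bit that real caches keep:

```python
class DirectMappedCache:
    """Tag-only model of a direct-mapped cache (no data is stored)."""

    def __init__(self, num_lines: int, block_size: int) -> None:
        self.num_lines = num_lines
        self.block_size = block_size
        # One tag per line; None marks an empty line (stand-in for a valid bit).
        self.tags = [None] * num_lines

    def access(self, address: int) -> bool:
        """Return True on a hit; on a miss, load the block and return False."""
        block = address // self.block_size  # block address
        line = block % self.num_lines       # (Block address) % (number of cache lines)
        tag = block // self.num_lines       # block-address bits not used as the index
        if self.tags[line] == tag:
            return True
        self.tags[line] = tag  # miss: the new block replaces whatever the line held
        return False


# A four-line cache with 8-byte blocks, matching the illustration's four lines.
cache = DirectMappedCache(num_lines=4, block_size=8)
print(cache.access(0))   # False: block 0 -> line 0 (cold miss)
print(cache.access(4))   # True: address 4 is still in block 0
print(cache.access(32))  # False: block 4 also maps to line 0, evicting block 0
print(cache.access(0))   # False: block 0 was evicted (conflict miss)
```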

Fully Associative Mapping

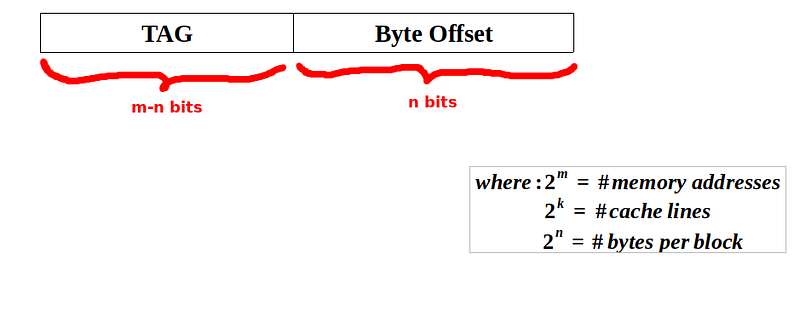

Fully associative mapping allows any memory block to be placed in any available cache line. Each memory address is divided into two parts: the "tag" and the "byte offset." Since memory blocks can occupy any cache line, there is no need for an "index" portion as seen in direct mapping.

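Here, the tag is the entire block address, and it is stored with the data in the cache. A fully associative cache therefore needs more tag storage than a direct-mapped cache with the same number of lines, because no part of the address is implied by the line's position: in the running example (32-bit addresses, 8-byte blocks), each tag grows from 25 bits to 29 bits.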
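A fully associative cache can also be modeled in a few lines of Python. Because this article does not cover replacement policies, the minimal sketch below assumes least-recently-used (LRU) eviction when every line is full; the class name is hypothetical, and the dictionary lookup stands in for the hardware comparing the tag against every line at once:

```python
from collections import OrderedDict


class FullyAssociativeCache:
    """Tag-only model of a fully associative cache with LRU eviction (assumed policy)."""

    def __init__(self, num_lines: int, block_size: int) -> None:
        self.num_lines = num_lines
        self.block_size = block_size
        # Tags of resident blocks, ordered from least to most recently used.
        self.lines = OrderedDict()

    def access(self, address: int) -> bool:
        """Return True on a hit; on a miss, load the block and return False."""
        tag = address // self.block_size  # the whole block address is the tag
        if tag in self.lines:
            self.lines.move_to_end(tag)  # mark as most recently used
            return True
        if len(self.lines) == self.num_lines:
            self.lines.popitem(last=False)  # cache full: evict the least recently used block
        self.lines[tag] = None
        return False


cache = FullyAssociativeCache(num_lines=4, block_size=8)
print(cache.access(0))   # False: cold miss
print(cache.access(32))  # False: block 4 goes into any free line
print(cache.access(0))   # True: blocks 0 and 4 coexist, unlike in direct mapping
```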

Set Associative Mapping

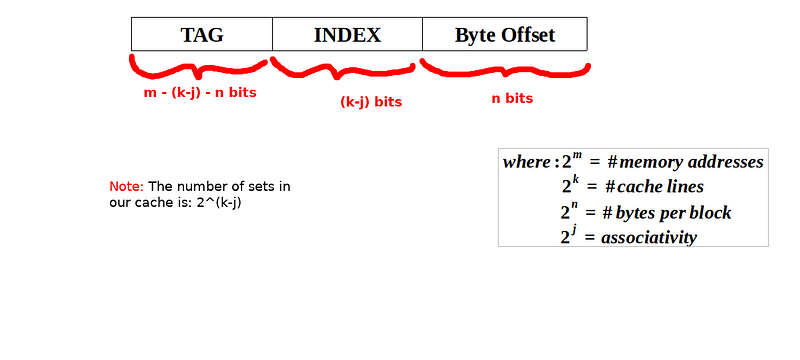

Set associative mapping divides the cache into several sets of equal size. Each memory block is mapped to one specific set using direct-mapping logic (the set number is the block address modulo the number of sets), but within that set it can occupy any available cache line. This method blends aspects of both direct and fully associative mapping. The number of cache lines in each set, usually a power of two, is called the cache's associativity: if each set contains k lines, the cache is termed a k-way set-associative cache.

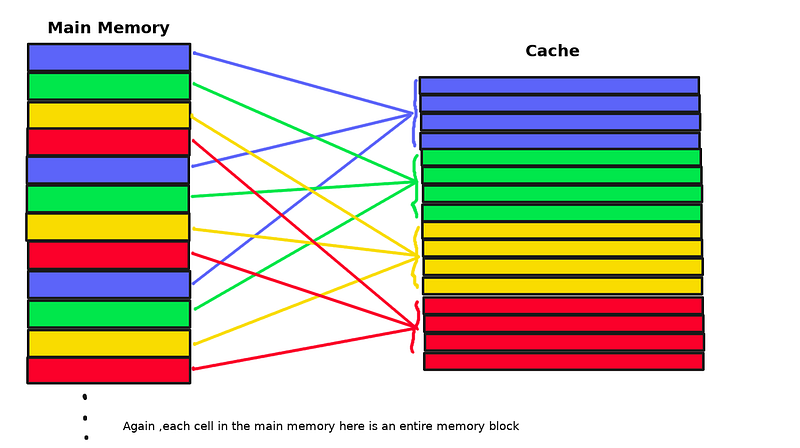

Below is an illustration of how mapping occurs in a set-associative cache.

As illustrated, each memory block maps to a specific set using direct mapping logic, while within that set, it can occupy any of its available cache lines.
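A k-way set-associative cache combines the two ideas: the set is chosen with the same modulo rule as direct mapping (using the number of sets instead of the number of lines), and the block may then go in any line of that set. This minimal Python sketch assumes LRU replacement within each set; the class and parameter names are hypothetical:

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Tag-only model of a k-way set-associative cache with per-set LRU (assumed policy)."""

    def __init__(self, num_lines: int, ways: int, block_size: int) -> None:
        if num_lines % ways != 0:
            raise ValueError("num_lines must be a multiple of ways")
        self.ways = ways
        self.block_size = block_size
        self.num_sets = num_lines // ways
        # One LRU-ordered collection of tags per set.
        self.sets = [OrderedDict() for _ in range(self.num_sets)]

    def access(self, address: int) -> bool:
        """Return True on a hit; on a miss, load the block and return False."""
        block = address // self.block_size
        s = self.sets[block % self.num_sets]  # direct-mapping logic picks the set
        tag = block // self.num_sets          # remaining block-address bits
        if tag in s:
            s.move_to_end(tag)  # mark as most recently used within the set
            return True
        if len(s) == self.ways:
            s.popitem(last=False)  # set full: evict its least recently used block
        s[tag] = None
        return False


# 4 lines arranged as 2 sets of 2 ways, 8-byte blocks.
cache = SetAssociativeCache(num_lines=4, ways=2, block_size=8)
print(cache.access(0))   # False: block 0 -> set 0
print(cache.access(16))  # False: block 2 -> set 0, second way
print(cache.access(0))   # True: both blocks coexist in set 0
print(cache.access(32))  # False: block 4 -> set 0, evicts block 2 (LRU)
```

With `ways=1` this model behaves like a direct-mapped cache, and with `ways` equal to `num_lines` (a single set) it behaves like a fully associative cache with LRU replacement, which shows concretely how set associative mapping blends the other two schemes.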

Final Thoughts

In this article, we examined the three mapping schemes used in cache memory. Each scheme determines how a memory block is mapped to a cache line, and each offers advantages and disadvantages that we will explore in future articles.

Thank you for reading! If you have feedback or suggestions for future topics, please leave a comment or reach out!
