How Meta Enhanced Its Cache Consistency to 99.99999999%


Introduction to Cache Consistency

Caching plays a vital role throughout computer systems, from hardware and operating systems to web browsers and, above all, backend development. For a company like Meta, effective caching is crucial for minimizing latency, handling heavy workloads, and cutting costs. However, heavy reliance on caching also brings challenges, particularly around cache invalidation.

Over the years, Meta has significantly improved its cache consistency, raising it from 99.9999% (six nines) to 99.99999999% (ten nines). In other words, fewer than 1 in 10 billion cache writes are inconsistent within its cache clusters. In this article, we will cover three main areas:

- What are cache invalidation and cache consistency?

- Why does Meta prioritize cache consistency to such a degree that six nines are insufficient?

- How Meta’s monitoring system contributed to improved cache invalidation and consistency, along with bug resolution.

Understanding Cache Invalidation and Consistency

A cache is not the definitive source of truth for data; therefore, it is essential to actively invalidate stale cache entries when the underlying data changes. If the invalidation process is mishandled, it could leave inconsistent values in the cache that diverge from the actual data source.

So, how can we manage cache invalidation effectively? One approach is a Time-To-Live (TTL) mechanism: each entry expires after a fixed period, which keeps data reasonably fresh without relying on invalidations from external systems, although an entry can still be stale for up to the TTL. In the context of Meta’s cache consistency, however, we will assume that invalidation is driven by mechanisms outside the cache itself.
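For contrast, TTL-based freshness can be sketched in a few lines. This is a minimal illustration only, not part of Meta's design; the `TTLCache` class, its `ttl_seconds` parameter, and the injectable `clock` are hypothetical. It shows the trade-off: staleness is bounded by the TTL rather than eliminated.

```python
import time
from typing import Callable, Dict, Hashable, Optional, Tuple


class TTLCache:
    """Hypothetical cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so tests can control time
        self._entries: Dict[Hashable, Tuple[object, float]] = {}

    def set(self, key: Hashable, value: object) -> None:
        # Store the value together with the moment it stops being served.
        self._entries[key] = (value, self.clock() + self.ttl_seconds)

    def get(self, key: Hashable) -> Optional[object]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            # Expired: drop it so the caller refills from the database.
            del self._entries[key]
            return None
        # Still within the TTL: may be stale if the database changed meanwhile.
        return value
```

With a 60-second TTL, a value written to the database 10 seconds after the entry was cached is invisible to readers for the remaining 50 seconds, which is exactly the kind of window explicit invalidation tries to close.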

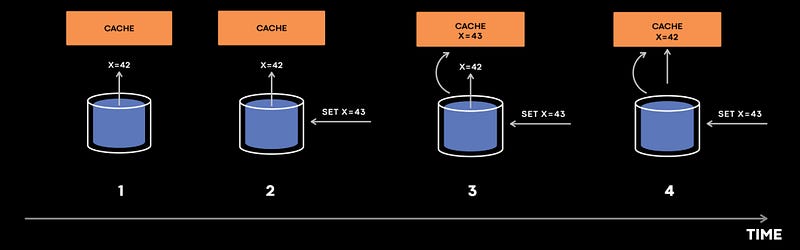

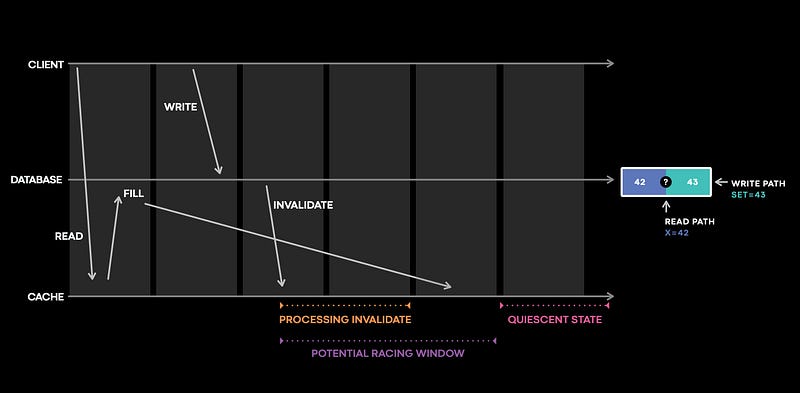

To illustrate how cache inconsistencies can occur, assume timestamps increase sequentially (1, 2, 3, 4):

- At time 1, the cache tries to fill x from the database and reads x = 42.

- At time 2, before x = 42 reaches the cache, another operation updates the database to x = 43.

- At time 3, the database sends an invalidation event for x = 43, which reaches the cache before the fill does, so the cache is set to 43.

- At time 4, the delayed fill for x = 42 finally arrives and overwrites the cache, which now holds 42 while the database holds 43.
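The interleaving can be replayed deterministically to make the ordering explicit. This is a toy model in which plain dictionaries stand in for the database and the cache; `replay_unversioned_race` is a hypothetical helper, and no real concurrency is involved.

```python
from typing import Dict, Tuple


def replay_unversioned_race() -> Tuple[Dict[str, int], Dict[str, int]]:
    """Replay the four steps in order; returns (database, cache)."""
    database: Dict[str, int] = {"x": 42}
    cache: Dict[str, int] = {}

    # Time 1: a cache miss triggers a fill, which reads x = 42 from the database.
    pending_fill = ("x", database["x"])

    # Time 2: before the fill reaches the cache, a write updates the database to 43.
    database["x"] = 43

    # Time 3: the invalidation event carrying x = 43 reaches the cache first.
    cache["x"] = 43

    # Time 4: the delayed fill lands last and overwrites the newer value.
    key, value = pending_fill
    cache[key] = value

    return database, cache


database, cache = replay_unversioned_race()
print(database["x"], cache["x"])  # prints: 43 42
```

Nothing in this model records which value is newer, so the last message to arrive always wins.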

To mitigate this issue, a versioning system can be employed for conflict resolution, ensuring older versions do not overwrite newer ones. While this solution may suffice for many companies, Meta's extensive scale and system complexity require more robust strategies.
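A minimal sketch of version-based conflict resolution, assuming every fill and invalidation carries a version number that increases monotonically with each write to a key (the `VersionedCache` class is hypothetical). Replaying the same race with versions attached, the delayed fill no longer wins:

```python
from typing import Dict, Optional, Tuple


class VersionedCache:
    """Hypothetical cache that stores a version next to every value."""

    def __init__(self) -> None:
        self._entries: Dict[str, Tuple[int, int]] = {}  # key -> (value, version)

    def apply(self, key: str, value: int, version: int) -> bool:
        """Apply a fill or invalidation; reject it if it is not newer than the cache."""
        current = self._entries.get(key)
        if current is not None and current[1] >= version:
            return False  # stale update: an older version must never overwrite a newer one
        self._entries[key] = (value, version)
        return True

    def get(self, key: str) -> Optional[int]:
        entry = self._entries.get(key)
        return None if entry is None else entry[0]


cache = VersionedCache()
cache.apply("x", 43, version=2)  # invalidation for the newer write arrives first
cache.apply("x", 42, version=1)  # delayed fill carries an older version and is rejected
print(cache.get("x"))  # prints: 43
```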

Why Cache Consistency is Critical for Meta

From Meta's standpoint, cache inconsistencies can be as detrimental as data loss in a database. For users, such inconsistencies can severely impact the overall experience.

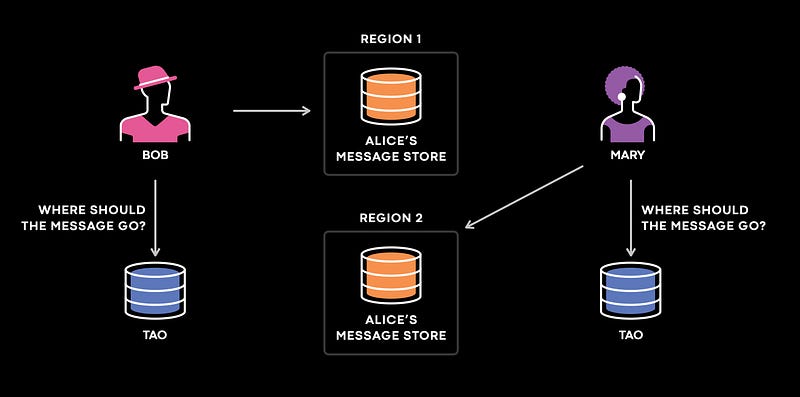

For instance, Instagram direct messages (DMs) rely on a mapping from each user to the primary storage location of that user's messages. Imagine three users: Bob in the USA, Mary in Japan, and Alice in Europe. When Bob and Mary both send messages to Alice, the system looks up Alice's storage location in the replica nearest to each sender. If those replicas hold inconsistent data, a message may be routed to a region that holds none of Alice's messages.

In this scenario, message loss would lead to a subpar user experience, making cache consistency a top priority for Meta.

Monitoring Cache Performance

To address cache invalidation and consistency challenges, the first step is measurement. Meta needs to measure cache consistency accurately and alert when inconsistencies arise, while avoiding false positives: if alerts fire spuriously, engineers learn to ignore them and the metric loses its credibility.

Before examining Meta's solution, consider the naive approach of logging every cache state change. With over 10 trillion cache fills per day, such logging would turn a manageable workload into an unmanageable one and make debugging harder rather than easier.
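To put the consistency targets and this volume side by side, the short calculation below treats each of the 10 trillion daily cache fills as a cache write (an assumption; the text does not state exactly how fills and writes relate) and computes how many inconsistent writes each level of nines would still allow per day. `max_inconsistent_per_day` is a hypothetical helper.

```python
# Figure from the text: over 10 trillion cache fills per day (a lower bound).
FILLS_PER_DAY = 10 * 10**12


def max_inconsistent_per_day(nines: int, writes_per_day: int = FILLS_PER_DAY) -> int:
    """Upper bound on inconsistent writes per day at a given number of nines.

    N nines of consistency means at most 1 in 10**N writes is inconsistent.
    """
    return writes_per_day // 10**nines


print(max_inconsistent_per_day(6))   # prints: 10000000
print(max_inconsistent_per_day(10))  # prints: 1000
print(FILLS_PER_DAY // 86_400)       # prints: 115740740 (average fills per second)
```

Under this assumption, six nines would still permit millions of inconsistent writes per day, and even ten nines leaves on the order of a thousand, which is why targeted detection matters more than exhaustive logging.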

Polaris: A Solution for Cache Consistency

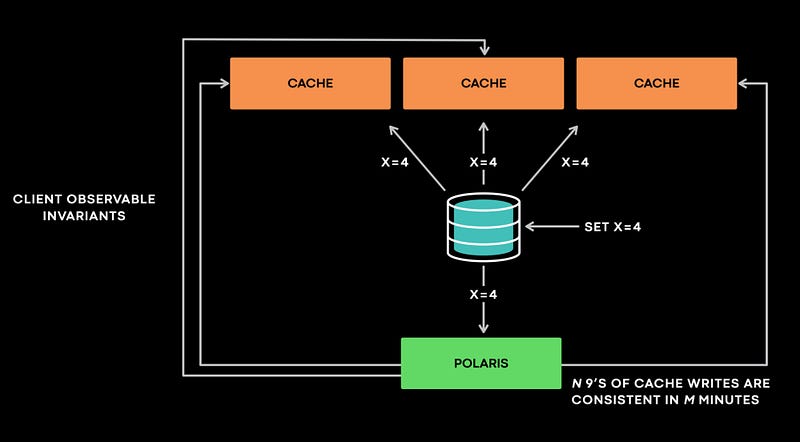

At a high level, Polaris interacts with stateful services as a client and does not need to understand their internals. It operates on the principle that “the cache should eventually be consistent with the database.” When Polaris receives an invalidation event, it queries all cache replicas to check whether any of them violates this invariant. For example, if it receives an invalidation event for x = 4 at version 4 and one replica returns x = 3 at version 3, Polaris flags that replica as inconsistent and requeues the sample to check it again later.
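The check can be sketched as follows. This is a hypothetical model, not Polaris's interface: each replica is a dictionary from key to a `(value, version)` pair, and every flagged sample is appended to a retry queue together with the replica it must be rechecked against. Missing entries are deliberately not flagged here, because on their own they are ambiguous.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Dict, List, Tuple

# Hypothetical replica model: replica name -> {key: (value, version)}.
Replicas = Dict[str, Dict[str, Tuple[int, int]]]


@dataclass
class Invalidation:
    key: str
    value: int
    version: int


def find_violations(event: Invalidation, replicas: Replicas) -> List[str]:
    """Return the replicas whose cached version is older than the invalidation's."""
    stale = []
    for name, store in replicas.items():
        entry = store.get(event.key)
        # Missing entries are skipped: they need separate, more careful handling.
        if entry is not None and entry[1] < event.version:
            stale.append(name)
    return stale


def check(event: Invalidation, replicas: Replicas,
          retry_queue: Deque[Tuple[Invalidation, str]]) -> None:
    # Requeue each violation so the same replica is rechecked later.
    for name in find_violations(event, replicas):
        retry_queue.append((event, name))


replicas = {"us": {"x": (4, 4)}, "eu": {"x": (3, 3)}}
queue: Deque[Tuple[Invalidation, str]] = deque()
check(Invalidation("x", 4, 4), replicas, queue)
print([name for _, name in queue])  # prints: ['eu']
```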

Polaris maintains multiple internal queues to implement backoff and retries, which is crucial for minimizing false positives.
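One way to picture the backoff-and-retry behavior is the sketch below. The delay tiers, the `run_with_backoff` function, and the `is_consistent` callback (a stand-in for re-querying the flagged replica) are all hypothetical, and time is simulated rather than slept. A sample is reported only if it is still inconsistent after every retry, so transient lag, such as an invalidation that is still in flight, does not trigger an alert.

```python
import heapq
from typing import Callable, List, Sequence, Tuple

# Hypothetical backoff tiers in seconds; the real values are not given in the text.
BACKOFF_DELAYS: Tuple[float, ...] = (1.0, 10.0, 60.0)


def run_with_backoff(
    samples: List[str],
    is_consistent: Callable[[str, float], bool],
    delays: Sequence[float] = BACKOFF_DELAYS,
) -> List[str]:
    """Recheck each flagged sample after growing delays; report persistent failures."""
    # Min-heap of (due_time, sample, attempt): the earliest due recheck pops first.
    pending: List[Tuple[float, str, int]] = [(delays[0], s, 0) for s in samples]
    heapq.heapify(pending)
    reported: List[str] = []
    while pending:
        now, sample, attempt = heapq.heappop(pending)
        if is_consistent(sample, now):
            continue  # the replica caught up: not a real inconsistency
        if attempt + 1 < len(delays):
            # Back off: schedule the next recheck further in the future.
            heapq.heappush(pending, (now + delays[attempt + 1], sample, attempt + 1))
        else:
            reported.append(sample)  # still wrong after every retry: alert on it
    return reported


# Sample "a" converges after 5 simulated seconds; sample "b" never does.
print(run_with_backoff(["a", "b"], lambda s, now: s == "a" and now >= 5))  # prints: ['b']
```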

To see why these safeguards matter, consider a scenario where Polaris receives an invalidation for x = 4 at version 4 but finds no entry for x when it queries the cache. It is unclear whether this should be flagged as an inconsistency, because there are two possibilities:

- The version 4 write is still the latest write on x, so the cache should hold x at version 4 and the missing entry is a genuine inconsistency.

- A later version 5 write deleted x, so Polaris is seeing a more recent view of the data than the invalidation event describes, and the cache is actually consistent.

To distinguish between these cases, Polaris must bypass the cache and query the database. Because such queries are compute-intensive and can put the database at risk, Polaris performs this check only once a sample has remained inconsistent for longer than a set threshold, such as one or five minutes. As a result, Polaris reports metrics of the form "99.99999999% of cache writes are consistent within five minutes."
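The decision for a missing entry can be sketched as follows. This illustrates the logic only and is not Polaris's code: `classify_missing_entry` is hypothetical, the `read_db_version` callback stands in for the expensive query that bypasses the cache (returning the key's current version, or `None` if the key was deleted), and the five-minute threshold is taken from the example values above.

```python
from typing import Callable, Optional

# Hypothetical threshold; the text mentions one or five minutes as examples.
DB_CHECK_THRESHOLD_SECONDS = 5 * 60


def classify_missing_entry(
    event_version: int,
    first_seen_inconsistent: float,
    now: float,
    read_db_version: Callable[[], Optional[int]],
) -> str:
    """Decide what to do when a replica has no entry for a key after an invalidation."""
    if now - first_seen_inconsistent < DB_CHECK_THRESHOLD_SECONDS:
        # Too early: keep retrying and spare the database a cache-bypassing query.
        return "retry"
    db_version = read_db_version()
    if db_version is None or db_version > event_version:
        # A newer write (for example, a delete at version 5) explains the gap.
        return "consistent"
    # The invalidated write is still the latest, so the entry should exist.
    return "inconsistent"
```

Deferring the database read until the threshold passes means most transient gaps resolve through ordinary retries, and only long-lived anomalies cost a database query.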

Now, let’s examine a coding example that illustrates how cache inconsistencies can be created.

A Simplified Coding Example

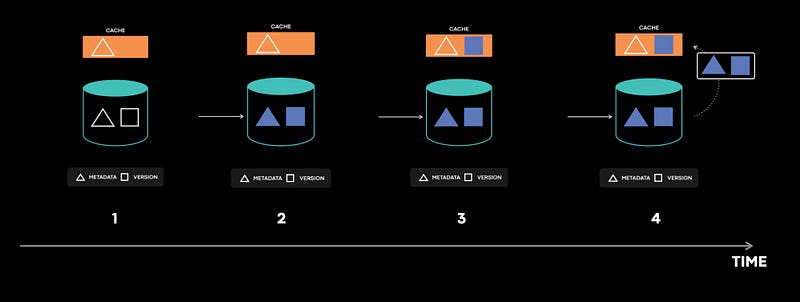

Consider a cache that maps each key to its metadata and to the version of that metadata.

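```python
import threading
import time

# In-memory stand-ins for the cache and the database.
cache_data = {}              # cache: key -> metadata
cache_version = {}           # cache: key -> version of the cached metadata
meta_data_table = {"1": 42}  # database: key -> metadata
version_table = {"1": 4}     # database: key -> version


def read_value(key):
    value = read_value_from_cache(key)
    if value is not None:
        return value
    # Cache miss: serve the read from the database.
    return meta_data_table[key]


def read_value_from_cache(key):
    if key in cache_data:
        return cache_data[key]
    # Fill the cache in the background so the read is not blocked.
    fill_cache_thread = threading.Thread(target=fill_cache, args=(key,))
    fill_cache_thread.start()
    return None


def fill_cache(key):
    # Metadata and version are filled in two separate, non-atomic steps.
    fill_cache_metadata(key)
    fill_cache_version(key)


def fill_cache_metadata(key):
    meta_data = meta_data_table[key]
    print("Filling cache meta data for", meta_data)
    cache_data[key] = meta_data


def fill_cache_version(key):
    time.sleep(2)  # widens the race window: a write can land between the two fills
    version = version_table[key]
    print("Filling cache version data for", version)
    cache_version[key] = version


def write_value(key, value):
    version = version_table.get(key, 0) + 1
    write_in_database_transactionally(key, value, version)
    time.sleep(3)  # the invalidation reaches the cache later
    invalidate_cache(key, value, version)


def write_in_database_transactionally(key, data, version):
    meta_data_table[key] = data
    version_table[key] = version


def invalidate_cache(key, metadata, version):
    try:
        # Deliberately fails (an int is not subscriptable) to simulate
        # an invalidation that cannot be applied.
        cache_data[key][metadata]
    except Exception:
        drop_cache(key, version)


def drop_cache(key, version):
    # Error path: drop the entry only if the invalidation is strictly newer.
    cache_version_value = cache_version[key]
    if version > cache_version_value:
        cache_data.pop(key)
        cache_version.pop(key)
```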

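In this example, the first read misses the cache (read_value_from_cache returns None) and starts a fill from the database. The fill copies the metadata (42) immediately but reads the version two seconds later. If write_value commits a new value (say, 43) at version 5 in between, the cache ends up holding the old metadata with the new version. That looks like a bug, but on its own it should be harmless, because the invalidation that follows is supposed to correct the cache's state.

However, the invalidation fails and falls through to drop_cache, which drops the entry only if the invalidation's version is strictly newer than the cached one. Because the cache already records version 5, the check fails, nothing is dropped, and the stale metadata persists indefinitely. This simplified version of the bug illustrates how complex interactions can lead to inconsistencies; the real-world issue was even more intricate because of database replication and cross-region communication.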
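The failure can be reproduced deterministically by replaying the same interleaving step by step, without threads or sleeps. The sketch is illustrative: the `replay` function and its `drop_unconditionally` flag are hypothetical. With the flag off, it reproduces the bug. With it on, the error path drops the entry regardless of version, which is one possible remedy (an assumption, not necessarily the fix Meta applied). Dropping is safe because the cache is not the source of truth: the next read simply refills from the database, at the cost of some extra database load.

```python
from typing import Dict, Tuple


def replay(drop_unconditionally: bool) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Replay the buggy interleaving; returns (cache_data, database values)."""
    db_value: Dict[str, int] = {"1": 42}
    db_version: Dict[str, int] = {"1": 4}
    cache_data: Dict[str, int] = {}
    cache_version: Dict[str, int] = {}

    # 1. A read misses; the fill copies the current metadata (42) right away.
    cache_data["1"] = db_value["1"]
    # 2. While the version fill is sleeping, a write commits 43 at version 5.
    db_value["1"], db_version["1"] = 43, 5
    # 3. The version fill wakes up and records the new version, 5.
    cache_version["1"] = db_version["1"]
    # 4. Applying the invalidation for version 5 fails, so the error path runs.
    invalidation_version = 5
    if drop_unconditionally or invalidation_version > cache_version["1"]:
        cache_data.pop("1")
        cache_version.pop("1")
    return cache_data, db_value


stale_cache, db = replay(drop_unconditionally=False)
print(stale_cache["1"], db["1"])  # prints: 42 43 (the stale entry is never removed)

fixed_cache, _ = replay(drop_unconditionally=True)
print("1" in fixed_cache)  # prints: False (the next read refills from the database)
```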

Consistency Tracing for Debugging

When Polaris raises a cache inconsistency alert, on-call engineers need logs to trace where the problem came from. Rather than logging every cache change, it is far more tractable to log only the changes most likely to cause inconsistencies.

To investigate, the on-call team should consider:

- Did the cache server receive the invalidation?

- Was the invalidation processed correctly?

- Did any item become inconsistent afterward?

Meta has developed a stateful tracing library that tracks cache mutations during critical interactions that might trigger bugs leading to inconsistencies.
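A minimal sketch of the idea, assuming a hypothetical `ConsistencyTracer` class, made-up event names, and an arbitrary 10-second window (the text does not describe how Meta's library defines its window or log format): mutations for a key are recorded only during a short window after that key is written, which is when races like the one above happen, so the trace stays small yet still answers the three questions listed earlier.

```python
import time
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List, Tuple

# Hypothetical window length; the text does not give one.
TRACE_WINDOW_SECONDS = 10.0


class ConsistencyTracer:
    """Hypothetical tracer: record a key's cache mutations only shortly after a write."""

    def __init__(self, clock: Callable[[], float] = time.monotonic,
                 window: float = TRACE_WINDOW_SECONDS) -> None:
        self.clock = clock  # injectable so tests can control time
        self.window = window
        self._window_end: Dict[str, float] = {}
        self.events: DefaultDict[str, List[Tuple[float, str, int]]] = defaultdict(list)

    def on_write(self, key: str) -> None:
        # A write to the key opens (or extends) its tracing window.
        self._window_end[key] = self.clock() + self.window

    def record(self, key: str, event: str, version: int) -> None:
        # Mutations outside the window are ignored, which keeps the log small.
        now = self.clock()
        if now <= self._window_end.get(key, float("-inf")):
            self.events[key].append((now, event, version))

    def timeline(self, key: str) -> List[str]:
        # Event names in the order they were recorded.
        return [event for _, event, _ in self.events.get(key, [])]
```

Reading a key's timeline in order shows whether an invalidation was received, whether it was applied or failed, and what the cache did afterward.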

Conclusion

In any distributed system, robust monitoring and logging are essential for promptly identifying and resolving bugs. In Meta's case, Polaris detected the anomaly and alerted the team, and with the help of consistency tracing, on-call engineers located the bug in under 30 minutes.
